LLaMA-Omni: A Novel AI Model Architecture Designed for Low-Latency and High-Quality Speech Interaction with LLMs

Large language models (LLMs) have emerged as powerful general-purpose task solvers, capable of assisting people in many aspects of daily life through conversational interaction. However, their reliance on text-based interaction limits their use in scenarios where text input and output are not ideal. Recent systems such as GPT-4o have introduced speech interaction with extremely low latency, improving the user experience, but the open-source community has yet to explore in depth how to build speech interaction models on top of LLMs. The challenge researchers are working on is how to achieve low-latency, high-quality speech interaction with LLMs, broadening their accessibility and applicability across usage scenarios.

Several approaches have been attempted to enable speech interaction with LLMs, each with limitations. The simplest method involves a cascaded system using automatic speech recognition (ASR) and text-to-speech (TTS) models. However, this sequential approach results in higher latency due to the stepwise processing of transcribed text, text response, and speech response. Multimodal speech-language models have also been proposed, discretizing speech into tokens and expanding LLM vocabularies to support speech input and output. While these models theoretically allow direct speech-to-speech generation with low latency, practical implementation often involves generating intermediate text to maintain higher quality, sacrificing some response speed. Other attempts include training language models on semantic or acoustic tokens, joint training of speech tokens and text, and adding speech encoders to LLMs. However, these methods often require substantial data and computational resources or focus solely on speech understanding without generation capabilities.

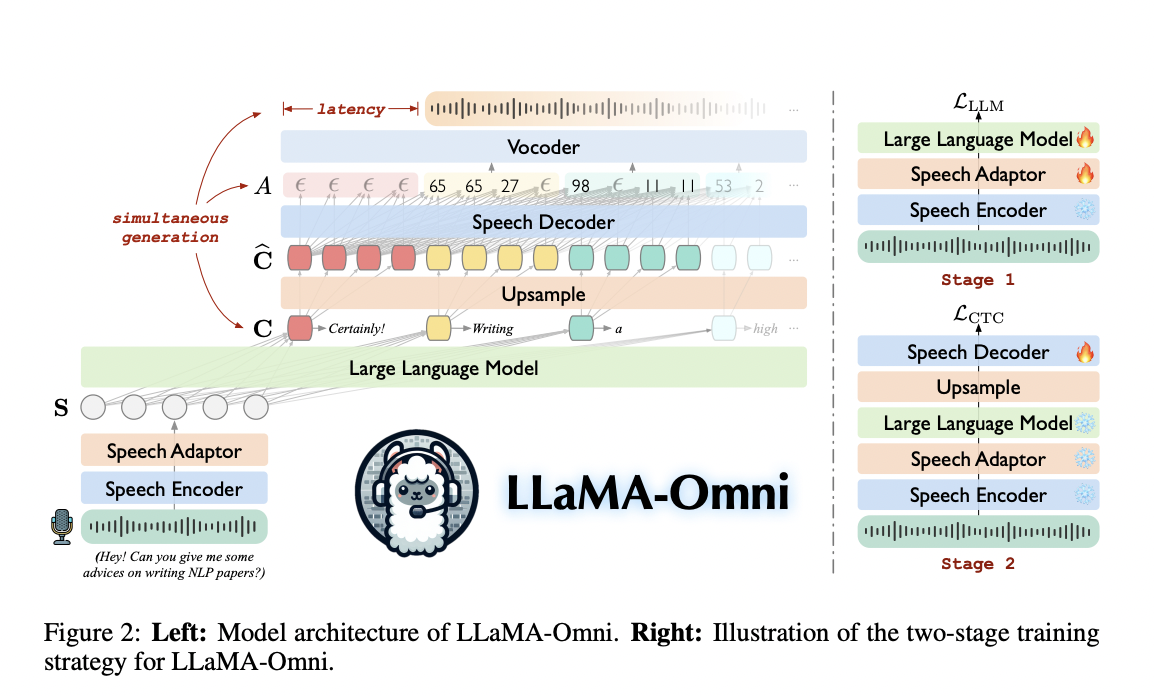

Researchers from the University of Chinese Academy of Sciences have introduced LLaMA-Omni, a model architecture designed to achieve low-latency, high-quality speech interaction with LLMs. The approach integrates a speech encoder, a speech adaptor, an LLM, and a streaming speech decoder to enable speech-to-speech communication. The model processes speech input directly through the encoder and adaptor before feeding it into the LLM, bypassing the need for intermediate text transcription. A non-autoregressive streaming Transformer serves as the speech decoder, using connectionist temporal classification (CTC) to predict discrete units corresponding to the speech response. This architecture allows text and speech outputs to be generated simultaneously, significantly reducing response latency. To support the development and evaluation of the model, the researchers created the InstructS2S-200K dataset, tailored specifically to speech interaction scenarios.

LLaMA-Omni’s architecture consists of four main components: a speech encoder, a speech adaptor, an LLM, and a speech decoder. The speech encoder, based on Whisper-large-v3, extracts meaningful representations from the user’s speech input. These representations are then processed by the speech adaptor, which maps them into the LLM’s embedding space through downsampling and a two-layer perceptron. The LLM, based on Llama-3.1-8B-Instruct, generates text responses directly from the speech instruction. The speech decoder, a non-autoregressive streaming Transformer, takes the LLM’s output hidden states and uses connectionist temporal classification (CTC) to predict discrete units corresponding to the speech response.
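To make the adaptor step more concrete, here is a minimal pure-Python sketch of "downsampling followed by a two-layer perceptron". It is an illustration under stated assumptions, not the authors' implementation: downsampling is done by concatenating every `k` consecutive frames, the hidden layer uses a ReLU, and all dimensions, the value of `k`, the random weights, and the function names are hypothetical choices made for the example.

```python
import random

def downsample(frames, k):
    """Concatenate every k consecutive frames into one longer vector.
    Assumption: a ragged tail of fewer than k frames is simply dropped."""
    usable = len(frames) - len(frames) % k
    return [sum((frames[i + j] for j in range(k)), []) for i in range(0, usable, k)]

def linear(x, weight, bias):
    """Dense layer: one output per weight row."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weight, bias)]

def relu(x):
    return [max(0.0, v) for v in x]

def init_linear(n_in, n_out, rng):
    """Random placeholder weights; a trained adaptor would load learned ones."""
    weight = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_out)]
    return weight, [0.0] * n_out

def speech_adaptor(frames, k, layer1, layer2):
    """Map encoder frames to vectors of the (hypothetical) LLM embedding size."""
    out = []
    for x in downsample(frames, k):
        hidden = relu(linear(x, *layer1))
        out.append(linear(hidden, *layer2))
    return out

# Toy dimensions, far smaller than a real encoder or LLM.
rng = random.Random(0)
enc_dim, hidden_dim, llm_dim, k = 4, 8, 6, 5
frames = [[rng.random() for _ in range(enc_dim)] for _ in range(23)]
layer1 = init_linear(enc_dim * k, hidden_dim, rng)
layer2 = init_linear(hidden_dim, llm_dim, rng)
embeddings = speech_adaptor(frames, k, layer1, layer2)
print(len(embeddings), len(embeddings[0]))  # 4 6
```

With 23 input frames and `k = 5`, the adaptor emits 4 vectors, which shows how downsampling shortens the sequence the LLM has to attend over.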

The model employs a two-stage training strategy. In the first stage, it learns to generate text responses from speech instructions. In the second stage, it learns to generate speech responses, with only the speech decoder being trained. During inference, LLaMA-Omni generates text and speech responses simultaneously. As the LLM produces text, the speech decoder generates the corresponding discrete units, which are converted into speech waveforms in real time. This enables very low-latency speech interaction, with users able to hear the start of a response before the complete text has been generated.
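The streaming behaviour described above can be sketched with standard greedy CTC post-processing: merge repeated frame labels, drop blanks, and hand units to a vocoder as soon as a chunk is full. This is an illustrative sketch only. The blank index, the chunk size, the toy frame labels, and the function names are assumptions, and the vocoder itself is left out.

```python
BLANK = 0  # assumed index of the CTC blank symbol

def ctc_collapse(frame_ids, blank=BLANK):
    """Greedy CTC collapse: merge consecutive repeats, then drop blanks."""
    units, prev = [], None
    for t in frame_ids:
        if t != prev and t != blank:
            units.append(t)
        prev = t
    return units

def stream_units(frame_id_steps, chunk_size, blank=BLANK):
    """Yield fixed-size chunks of discrete units as decoder frames arrive.
    Each element of frame_id_steps holds the frame labels produced for one
    LLM decoding step; a real system would pass each chunk to a vocoder."""
    buffer, prev = [], None
    for step in frame_id_steps:
        for t in step:
            if t != prev and t != blank:
                buffer.append(t)
            prev = t
        while len(buffer) >= chunk_size:
            yield buffer[:chunk_size]
            buffer = buffer[chunk_size:]
    if buffer:  # flush whatever is left at the end of the response
        yield buffer

steps = [[0, 7, 7, 0], [7, 3, 3, 0], [0, 5, 5, 5], [2, 0, 0, 9]]
flat = [t for step in steps for t in step]
print(ctc_collapse(flat))             # [7, 7, 3, 5, 2, 9]
print(list(stream_units(steps, 2)))   # [[7, 7], [3, 5], [2, 9]]
```

The first chunk is available after the second decoding step rather than after the whole response. A smaller `chunk_size` gives earlier audio at the cost of synthesizing from shorter unit sequences.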

The InstructS2S-200K dataset was created to train LLaMA-Omni for speech interaction. It consists of 200,000 triplets of speech instructions, text responses, and speech responses. The construction process involved rewriting text instructions for speech using Llama-3-70B-Instruct, generating concise responses suitable for speech, and synthesizing speech using CosyVoice-300M-SFT for instructions and VITS for responses. The dataset combines 50,000 entries from Alpaca and 150,000 from UltraChat, covering diverse topics. This specialized dataset provides a robust foundation for training LLaMA-Omni in speech-based tasks, ensuring natural and efficient interactions.
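As a rough picture of what one training example looks like, the sketch below models a triplet as a small record and checks the source mix quoted above. The class name, field names, and file paths are hypothetical and do not reflect the dataset's actual schema; only the counts come from the article.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class S2STriplet:
    """One example: speech instruction, text response, speech response.
    Field names are illustrative, not the dataset's real schema."""
    speech_instruction_path: str
    text_response: str
    speech_response_path: str
    source: str  # "alpaca" or "ultrachat"

# Entry counts per source, as reported in the article.
SOURCE_COUNTS = {"alpaca": 50_000, "ultrachat": 150_000}

def source_shares(counts):
    """Fraction of the dataset contributed by each source."""
    total = sum(counts.values())
    return {name: n / total for name, n in counts.items()}

example = S2STriplet(
    speech_instruction_path="instructions/000001.wav",  # hypothetical path
    text_response="Sure, here is a short answer.",
    speech_response_path="responses/000001.wav",        # hypothetical path
    source="alpaca",
)
print(sum(SOURCE_COUNTS.values()))   # 200000
print(source_shares(SOURCE_COUNTS))  # {'alpaca': 0.25, 'ultrachat': 0.75}
```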

LLaMA-Omni outperforms previous models in speech interaction tasks, as demonstrated by results on the InstructS2S-Eval benchmark. It excels in both content and style for speech-to-text and speech-to-speech instruction, achieving better alignment between speech and text responses. The model offers a trade-off between speech quality and response latency, with latency as low as 226ms. LLaMA-Omni’s simultaneous text and speech generation results in significantly faster decoding times compared to other models. Case studies show that LLaMA-Omni provides more concise, detailed, and helpful responses suitable for speech interaction scenarios, outperforming previous models in this context.

LLaMA-Omni is a model architecture developed to enable high-quality, low-latency speech interaction with LLMs. Built upon the Llama-3.1-8B-Instruct model, LLaMA-Omni incorporates a speech encoder for understanding and a streaming speech decoder for simultaneous text and speech response generation. The model was aligned with speech interaction scenarios through the creation of InstructS2S-200K, a dataset containing 200,000 speech instructions and responses. Experimental results show that LLaMA-Omni outperforms existing speech-language models in both content and style, with a response latency as low as 226ms. The model's efficient training process, requiring less than 3 days on 4 GPUs, facilitates the rapid development of speech interaction models based on cutting-edge LLMs.

Check out the Paper. All credit for this research goes to the researchers of this project.

Asjad is an intern consultant at Marktechpost. He is pursuing a B.Tech in mechanical engineering at the Indian Institute of Technology, Kharagpur. Asjad is a machine learning and deep learning enthusiast who is always researching the applications of machine learning in healthcare.